Hello!

How have you been? I’ve been keeping busy with work at crikey dot com dot au and a stealth mode project, but here’s something I thought might interest you.

Do you know who owns your medical data? I’m talking about scans of your body, your doctor’s scribbled notes, information about what you’ve contracted and which specialists you’ve seen. Everything that’s required to know intimate details of your life that you don’t even share with your friends and family.

What are companies allowed to do with your data? And, even more importantly, do you know what they’re actually doing with it?

Do you have any tips/leaks/story ideas? Please get in touch, confidentiality guaranteed.

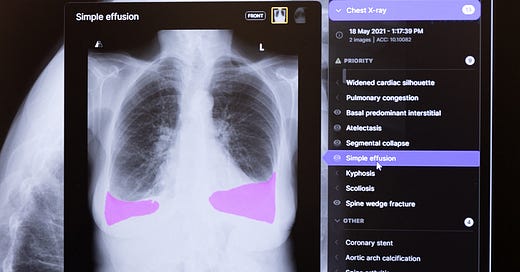

That’s the crux of the story I’ve been chasing about Australia’s largest medical imaging provider, I-MED, providing chest x-rays of potentially hundreds of thousands of Australians to health tech company harrison.ai to train AI without the patients’ knowledge.

Since I first reported on it two weeks ago, harrison.ai has told its investors that concerns about patient consent are I-MED’s problem, not theirs; Australia’s privacy regulator has given a “please explain” to I-MED; and I-MED has suffered a data breach exposing the data of tens of thousands of patients (if not more) to anyone who knew where to look. The story has been picked up or followed by quite a few outlets.

Putting aside the corporate intrigue (this comes at an awkward time for both companies, as harrison.ai is raising money and I-MED is looking for a buyer), this is really about two things: who can access and control information about you, and what the impact (and value) of this data is. They are two sides of the same coin.

A lot of people have got in touch saying they think their data is caught up in this without their knowledge, that they are disgusted by what they’ve learned, and that they want to know more. There have also been a few who’ve said they don’t mind whatever the companies have done, given that both seem to be doing an important service, whether providing imaging or creating a tool to help radiologists do their job better.

I can understand looking at this and thinking “seems like a good use of my data”. Not everyone agrees, but it’s not an unimaginable view. But you should care about this even if you approve of this use case because this is a story that’s about the process, not the outcome, of using your medical data.

If you think harrison.ai and I-MED are above reproach, what’s to stop I-MED giving access to your medical data to a dodgy company? Or another imaging place doing the same thing? Given how slapdash this is, how would we know if it was happening? Perhaps it already is.

Both companies say everything they’ve done is above board. As I’ve noted repeatedly, neither company has been particularly forthcoming about the details of what they’ve done.

It’s very much in the public interest to know the details of how these companies have handled your sensitive health information. I am surprised they do not feel an obligation to give more information, given the trust both businesses depend on to operate. Regardless of what they’ve disclosed, I’m going to keep reporting to find out more because I think people deserve to know what’s happening, one way or another. (And if you know anything, please get in touch, confidentiality guaranteed.)

Would love to hear what you think about the story! If you reply, I promise I will read and respond.

Lots of love,

Cam

It's the same with all these AI training stories: Engage with the people affected by the use of the data first, then decide how to progress. This isn't, ever, how things occur -- but this is how they should occur.

Of course, it's no surprise you're getting donuts from the companies and they don't want to talk to you. That's digging a hole for themselves. But keep going!!!!

I've had pretty regular chest and/or spinal x-rays etc through I-MED for probably 15 years now. I don't care if my x-rays are "de-identified"; due to my medical history they're almost certainly unique enough that they could be connected in series, which is a weird and uncomfortable feeling.